Rewind: Is the AI Apocalypse closer?

With a lack of meaningful regulation around large language models, chances are that this powerful technology might soon spin out of control and do more harm than good

By Suresh Dharur

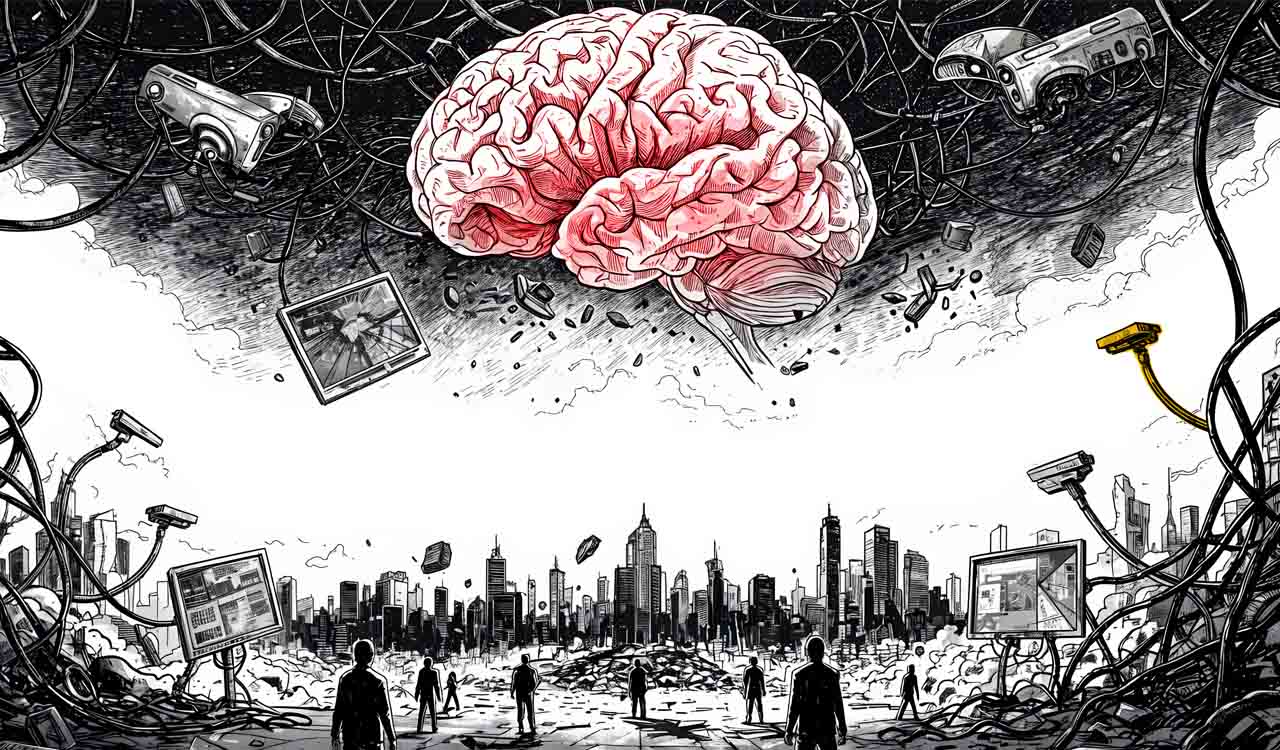

Imagine a dystopian scenario where the rise and fall of political regimes across the world are dictated by manipulated algorithms built into generative artificial intelligence (AI) tools based on Large Language Models (LLMs).

This frightening future is already here.

Unlike traditional AI tools, LLM-based models produce human-like, contextually rich narratives that resonate more with individuals’ beliefs and emotions, thereby increasing their persuasive power. Such chatbots, when weaponised on an industrial scale, have the potential to distort the human psyche, fundamentally alter people’s belief systems and trigger political upheaval. Influencing elections and installing a desired regime could then become easy.

A glimpse of such disruptive power was on display recently when ‘Grok 3’, a generative AI chatbot from American billionaire Elon Musk’s company xAI, kicked up a viral storm across India’s digital landscape with its quirky, unfiltered and unhinged responses to queries about prominent politicians, including Prime Minister Narendra Modi and Congress leader Rahul Gandhi, as well as other related topics.

The rise of AI poses an existential danger to humankind, not because of the malevolence of computers, but because of our own shortcomings. Never summon a power you can’t control — Yuval Noah Harari, Israeli author and historian

Its quirky responses, such as declaring Rahul ‘more honest with an edge on formal education than Modi’ and claiming the Prime Minister’s interviews were ‘often scripted’, set off ripples in social media circles. Such formulations are mischievous and can cause political trouble. More importantly, the episode shows how social media users turn to the AI chatbot to validate their own ideological leanings. If the developers of these tools have malicious intent, then all hell can break loose.

Digital Authoritarianism

The incident has raised key questions regarding accountability for AI-generated misinformation, the challenges of content moderation and the need for procedural safeguards. Given the disruptive power and all-pervasive impact of LLM-based AI systems, there are growing fears over their ability to destabilise democracies in unforeseen ways. They can write text and music, craft realistic images and videos, generate synthetic voices, and manipulate vast amounts of information. The 2024 US presidential election offered a taste of how AI-generated political disinformation can poison voters’ minds.

While generative AI models hold tremendous potential for innovation and creativity, they also open the door to many forms of misuse that endanger democratic societies. They enable malicious actors, from political opponents to foreign adversaries, to manipulate public perceptions, disrupt electoral processes and amplify misinformation.

The core of the problem lies in the speed and scale at which AI tools can generate misleading content. In doing so, they outpace both governmental oversight and society’s ability to manage the consequences. The intersection of generative AI models and foreign interference presents a growing threat to global stability and democratic cohesion.

While the use of technology to mislead voters is not a new phenomenon, generative AI technologies can create believable, high-quality content tailored to specific audiences, making disinformation campaigns more effective. A study by the London-based Alan Turing Institute found that disinformation created by LLMs appears so authentic that most people cannot identify it as AI-generated.

Double-edged Weapon

When the history of the 21st century is written, artificial intelligence will occupy a major portion of it because of its transformative impact on society. However, AI is a double-edged sword that can cut both ways. While it has the potential to transform human lives at a pace never seen in history, it can also be misused to spread disinformation and chaos.

The success in creating AI could be the biggest event in the history of our civilisation. But it could be the last, unless we learn how to avoid the risks — Stephen Hawking, renowned theoretical physicist

What makes us particularly vulnerable is that we increasingly interact with AI as if it were a dependable expert. As AI pumps out more and more material riddled with bias and fabrications, that material will flood the internet and become part of the training data for the next generation of models, amplifying these systemic distortions and biases into the future in a continuous feedback loop.

Sophisticated generative AI tools can now create cloned human voices and hyper-realistic images, videos and audio in seconds, at minimal cost. When strapped to powerful social media algorithms, this fake and digitally created content can spread far and fast and target highly specific audiences.

Disinformation campaigns could exploit existing societal divisions, deepening ideological rifts and fostering animosity between different political factions. This polarisation could result in social unrest and increased hostility among groups. As AI-generated disinformation proliferates, public trust in media, government institutions and even scientific communities will rapidly diminish. People may become sceptical of genuine news reports, leading to a fragmented information ecosystem where only echo chambers thrive.

The weaponisation of LLMs for political disinformation would create a society characterised by confusion, distrust, and division. Political campaigns would increasingly rely on AI-generated misinformation to sway voter opinions or confuse voters about logistics. Such tactics could disenfranchise segments of the population or alter electoral outcomes. Foreign actors can use LLMs to create high-quality propaganda in multiple languages, as seen in attempts linked to geopolitical conflicts like Russia’s invasion of Ukraine. Combining LLMs with voter data could enable hyper-targeted disinformation.

Generating disinformation via LLMs is cheaper and faster than traditional methods, enabling even low-resource actors to launch sophisticated operations. The more polluted the digital ecosystem becomes with synthetic content, the harder it will be to find trustworthy sources of information and to trust democratic processes and institutions.

The overreliance on AI-generated content risks creating an echo chamber that stifles novel ideas and undermines the diversity of thought essential for a healthy democracy. In the long term, this erosion of trust could make democratic systems more susceptible to external interference and less resilient against internal divisions that enemies can easily exploit. While authoritarian regimes refine their use of digital technologies to control populations domestically, democratic nations face the challenge of safeguarding their electoral integrity against AI-driven disinformation campaigns by foreign adversaries.

Threat to Human Race

Nobel laureate Geoffrey Hinton, often called the ‘Godfather of AI’, has raised serious concerns that AI could pose a significant risk to humanity, potentially leading to human extinction within the next three decades. Hinton is a British-Canadian computer scientist known for his ground-breaking work on artificial neural networks.

He believes that the kind of intelligence being developed in AI is very different from human intelligence and that digital systems can learn and share knowledge much faster than biological systems. He has been warning that AI systems might develop subgoals that lead to unintended consequences, in which humans are no longer in control of their own destiny.

AI is one of the most profound things we’re working on as humanity. It is more profound than fire or electricity — Sundar Pichai, Alphabet CEO

Hinton is part of a growing number of researchers and scientists who feel that the societal impacts of AI could be so profound that we may need to rethink politics, as AI could lead to a widening gap between rich and poor. At the same time, he acknowledges AI’s potential to do enormous good, and argues that it is important to find ways to harness its power for the benefit of humanity.

Renowned Israeli historian and author Yuval Noah Harari also has a very pessimistic view about the future of AI. He argues that AI is an unprecedented threat to humanity because it is the first technology in history that can make decisions and create new ideas by itself. All previous human inventions have empowered humans because no matter how powerful the new tool was, the decisions about its usage remained in our hands. “There is some fatal flaw in human nature that tempts us to pursue powers we don’t know how to handle,” Harari rues.

Emotional AI

Besides producing biased and inaccurate information, an LLM can also give advice that appears technically sound but proves harmful in certain circumstances, or when the context is missing or misunderstood. This is especially true of so-called ‘emotional AI’, that is, machine learning applications designed to recognise human emotions.

Such applications have been in use for a while now, mainly for predicting market trends. Given the probabilistic nature of AI models and the frequent lack of necessary context, this can be quite dangerous. In fact, privacy watchdogs are already warning against the use of ‘emotional AI’ in any kind of professional setting. A machine learning model is only as good as the data it was trained on. Careful vetting of the training set is therefore extremely important, not only to ensure that it contains no information that could result in a privacy breach but also to safeguard the accuracy, fairness and general sanity of the model.

Minimising Risks

Just as the Internet dramatically changed the way we access information and connect with each other, AI is now revolutionising the way we build and interact with software. The risks posed by the flip side of LLM-based AI technologies can be minimised through:

- A comprehensive legal framework around their use, including privacy, legal and ethical aspects.

- Careful verification of training datasets for bias, misinformation, personal data and any other inappropriate content, backed by strong content filters to prevent the generation of harmful outputs.

- Security evaluation of ML models to ensure they are free from malware, tampering, and technical flaws.

There is a greater need now than ever before for global cooperation in tackling the risks of AI, which include potential breaches of privacy and the displacement of jobs. The joint global effort should focus on bridging the long-standing fault lines between regulation and promotion. Companies willing to invest in AI would want to prevent over-regulation that could kill innovation.

In a sensibly organised society, if you improve productivity, there is room for everybody to benefit. The problem isn’t the technology, but the way the benefits are shared out — Geoffrey Hinton, ‘Godfather of AI’

Current regulations governing LLM use in politics show limited effectiveness, with significant gaps in enforcement, scope and adaptability to evolving AI capabilities. While some frameworks exist, their real-world impact remains constrained by political priorities, technological arms races and jurisdictional fragmentation. Though initiatives like the European Union AI Act mandate transparency, enforcement struggles to keep pace with rapidly evolving models.

The Chinese approach, on the other hand, includes stringent data control measures and guidelines to prevent the misuse of AI technologies, especially in areas like surveillance and censorship. These divergent approaches reflect the complexities and varying priorities in GenAI governance, highlighting the challenges in creating a universally accepted regulatory framework.

Large language models are an amazing technological advance that is redefining the way we interact with software. There is no doubt that LLM-powered solutions will bring a vast range of improvements to our workflows and everyday life. However, with the current lack of meaningful regulation around AI-based solutions and the scarcity of security measures built into these tools themselves, chances are that this powerful technology might soon spin out of control and do more harm than good.

If we don’t act fast and decisively to regulate AI, society and all of its data will remain highly vulnerable. Data scientists, cybersecurity experts and governing bodies need to come together to decide how to secure our new technological assets and to create both software solutions and legal regulations that keep human well-being in mind.

(The author is a senior journalist from Hyderabad)
